Most people doing accessibility testing use some sort of testing tool. These tools are great for quick validation, as a starting point for audits, and as part of continuous integration. Accessibility testing tools allow content authors to test fast and to test often: a “shift left” strategy. There are many accessibility tools out there, so deciding which ones are best for you can be a challenge. Important considerations include:

- How well does the tool fit into your existing workflow?

- How helpful are the feedback and documentation the tool provides?

- Does the tool support the technologies you use, such as nested iframes, CSS media queries, shadow DOM, etc.?

- Is the tool configurable and customizable to the extent you need?

- How well does the tool fit your accessibility support requirements?

One of the harder aspects of comparing accessibility tools is knowing exactly how their results differ. While most of them claim to test against the same accessibility standard (WCAG 2), exactly what each tool tests varies in significant ways, which can produce wildly different results. Automated testing tools often do not clearly describe exactly what they test and why some things fail while others do not.

This lack of transparency is often intentional. Many vendors of automated accessibility tools treat their accessibility ruleset as a selling point for that product. Rules are treated as intellectual property, which they don’t want to give away by explaining in detail how their ruleset works. This can make it difficult to understand whether the automated accessibility tool actually does what you want it to do.

Accessibility Rules and Rulesets

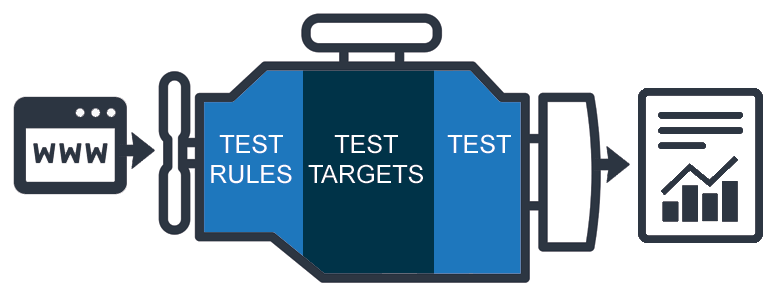

At the core of every automated accessibility tool is an accessibility engine. This engine pulls in different data sources, such as the page’s DOM, its CSS, and perhaps the HTTP messages exchanged while loading the page. All of this data is then passed to scripts called “test rules.”

Each rule does two things. First, it finds all elements, components, attributes, etc. that should be tested for accessibility. These are often referred to as the “test targets.” Second, it runs a small accessibility test on each of the test targets. For instance, a rule might look up the page’s <html> element and then test whether that element has a valid lang or xml:lang attribute.
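The two steps a rule performs can be sketched in Python using only the standard library’s html.parser. This is a minimal, hypothetical illustration, not how any particular engine implements its rule: the function names are made up, and “valid” is simplified to “the primary subtag is two or three ASCII letters,” whereas a real rule would check values against the IANA language subtag registry.

```python
from html.parser import HTMLParser


class HtmlElementFinder(HTMLParser):
    """Collects the attributes of each <html> start tag in a document."""

    def __init__(self):
        super().__init__()
        self.targets = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names for us.
        if tag == "html":
            self.targets.append(dict(attrs))


def find_test_targets(source):
    """Step 1: find the test targets (here: <html> elements).

    Unlike a browser, html.parser does not create implied elements, so a
    document without an explicit <html> tag yields no targets here.
    """
    finder = HtmlElementFinder()
    finder.feed(source)
    finder.close()
    return finder.targets


def has_valid_lang(attrs):
    """Step 2: test one target.

    Simplifying assumption: a value is "valid" if its primary subtag is
    2-3 ASCII letters (e.g. "en", "en-US"). A real rule would check the
    IANA language subtag registry.
    """
    for name in ("lang", "xml:lang"):
        value = (attrs.get(name) or "").strip()
        primary = value.split("-")[0]
        if primary.isascii() and primary.isalpha() and 2 <= len(primary) <= 3:
            return True
    return False


def run_html_lang_rule(source):
    """Hypothetical rule runner: one outcome per test target."""
    targets = find_test_targets(source)
    if not targets:
        return ["inapplicable"]
    return ["passed" if has_valid_lang(t) else "failed" for t in targets]
```

For example, run_html_lang_rule('<html lang="en"><body></body></html>') returns ['passed'], while the same page with lang="" returns ['failed'].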

This collection of accessibility test rules is called a ruleset. Most accessibility testing engines support one ruleset that can often be filtered into different categories. For example, a ruleset can have WCAG 2.0 rules, WCAG 2.1 rules, best practice rules, and a subset of all of those could also be Section 508 rules. These different configurations allow for a lot of variety in testing. Many differences in accessibility tools can be explained by which rules are enabled by default, and what is reported as a best practice, a warning, or a WCAG conformance issue.
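A ruleset with categories can be modeled as rules carrying tags, with a configuration that selects which tags are enabled. In this minimal sketch, the rule IDs and tag names are hypothetical placeholders; real tools use their own identifiers and configuration options.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    rule_id: str
    tags: frozenset  # categories such as standard versions or "best-practice"


# Hypothetical ruleset: rule IDs and tags are illustrative placeholders.
RULESET = [
    Rule("html-has-lang", frozenset({"wcag20", "section508"})),
    Rule("image-alt", frozenset({"wcag20", "section508"})),
    Rule("autocomplete-valid", frozenset({"wcag21"})),
    Rule("heading-order", frozenset({"best-practice"})),
]


def select_rules(ruleset, enabled_tags):
    """Return the IDs of rules that carry at least one enabled tag."""
    enabled = set(enabled_tags)
    return [rule.rule_id for rule in ruleset if rule.tags & enabled]
```

Enabling only "best-practice" selects ['heading-order'], while enabling "wcag20" and "wcag21" selects the other three rules. Changing the enabled tags alone changes which results a tool reports.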

A common question about automated accessibility tools is how many WCAG success criteria they test. This is somewhat of a misleading question. With the exception of criterion 4.1.1 Parsing, none of the WCAG 2.1 success criteria can be accurately reported on by automated testing alone. Instead, rules test a discrete part of a success criterion. This leads some tools to have a dozen or more rules for a single WCAG 2 success criterion. Success criteria can be broken down in different ways: what one tool does in a single large rule could be split across many smaller rules in another.
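The following sketch shows why rule results don’t translate directly into a verdict on a whole success criterion. It assumes a hypothetical mapping from rule IDs to WCAG 2 success criteria: a single failing rule is enough to show a criterion is not met, but passing rules only cover the parts of the criterion they test, so without a failure the most an automated tool can honestly say is “cannot tell.”

```python
# Hypothetical mapping from rule IDs to the WCAG 2 success criteria each rule
# partially tests. Several rules may map to one criterion, and one rule may
# map to several criteria.
RULE_TO_CRITERIA = {
    "image-has-name": ["1.1.1"],
    "image-button-has-name": ["1.1.1", "4.1.2"],
    "svg-image-has-name": ["1.1.1"],
    "button-has-name": ["4.1.2"],
}


def summarize_by_criterion(rule_outcomes):
    """Roll rule outcomes ("passed", "failed", "inapplicable") up to criteria.

    One failed rule shows the criterion is not satisfied. Passing rules only
    cover part of a criterion, so without a failure the automated verdict is
    "cannot tell": the rest of the criterion still needs manual review.
    """
    verdicts = {}
    for rule_id, outcome in rule_outcomes.items():
        for criterion in RULE_TO_CRITERIA.get(rule_id, []):
            if outcome == "failed":
                verdicts[criterion] = "failed"
            else:
                verdicts.setdefault(criterion, "cannot tell")
    return verdicts
```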

Transparency In A11y Tools

In recent years, automated accessibility tools have increasingly opened up their rulesets. The reasoning behind this shift is the idea that competition between automated accessibility tools should be about how useful and helpful a tool is, and not about how exactly test rules work. Transparency of rulesets can benefit users a lot. To start, it lets them make an informed decision about what tools to use and how to best configure them to fit their own approach to accessibility testing, rather than the tool’s default interpretation.

There are huge differences between automated accessibility tools in terms of accuracy and interpretation. For example, some tools might attempt text recognition in images and compare the result to the text alternative, while others consider such a test insufficiently accurate and do not include such a rule. Another example is skipped heading levels. Most tools flag this as an issue, but some treat it as a WCAG 2 conformance issue, while others consider it a best practice.
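In the heading example, the detection logic can be identical across tools while the reported classification is just a configuration value. This sketch is a simplification: it finds headings with a regular expression over <h1> to <h6> start tags, whereas a real tool would inspect the rendered page (including ARIA headings), and the severity labels are hypothetical.

```python
import re


def skipped_heading_levels(html):
    """Return (previous, current) level pairs where a heading skips a level.

    Simplification: headings are found with a regex over <h1>-<h6> start
    tags; ARIA headings and hidden content are ignored.
    """
    levels = [int(level) for level in re.findall(r"<h([1-6])\b", html, flags=re.IGNORECASE)]
    return [(prev, cur) for prev, cur in zip(levels, levels[1:]) if cur > prev + 1]


def report(html, severity="best-practice"):
    """Same detection logic; only the configured severity label differs."""
    return [
        {"issue": f"h{prev} followed by h{cur}", "severity": severity}
        for prev, cur in skipped_heading_levels(html)
    ]
```

One tool might call report(page) and another report(page, severity="wcag-failure"): both find the same jump from h1 to h3, but they classify it differently.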

Transparency in automated accessibility tools is helpful in understanding how a vendor interprets WCAG, but it still leaves a big unanswered question: if all of these tools reportedly test the same accessibility standard, why do they often disagree with each other? Additionally, what, if anything, can be done about it? Enter: the ACT Task Force.

W3C Harmonization of A11y Tools

The Accessibility Conformance Testing (ACT) Task Force was set up in 2016 to improve consistency between accessibility testers, covering both automated accessibility testing tools and manual testing by experts and non-experts. Following the trend of increasing transparency in automated accessibility tools, the ACT Task Force set out the following plan:

- Develop a shared format for ACT rules that can express test procedures for accessibility tools (such as axe-core or Pa11y) and testing methodologies (such as the Trusted Tester Program or RGAA).

- Help organizations express their ruleset in the ACT Rules Format.

- Collect ACT Rules, vet them for quality, and publish them as a new W3C accessibility resource.

You can think of this ACT rules collection as WCAG Failure Techniques written for accessibility testers rather than for content authors. ACT rules don’t just benefit tool developers. They help anyone working on accessibility in a number of ways:

- ACT Rules come with a set of test cases. This lets anyone verify that an accessibility test method, automated or not, follows an accepted WCAG interpretation. If a test case says something should pass, and a tool says it shouldn’t, you know something has gone wrong.

- ACT Rules create a trusted baseline for acceptance testing. By specifying, before a web project starts, which rules are expected to pass during acceptance testing, developers get a clear definition of done to work with. This can include best practice rules as well as WCAG 2 rules; for instance, a best practice rule requiring all page content to be inside ARIA landmarks.

- ACT Rules establish a technology-specific description of accessibility requirements. This means that they can be used to answer some long-standing questions, such as: “Where WCAG 2 mentions headings, does that include table headings?”
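The first two points can be sketched in a few lines. In this hypothetical example, test case IDs and rule IDs are made up and outcomes are plain strings; it does not reproduce the format in which ACT test cases are actually published, and it is a simplified comparison. The first function flags where a tool disagrees with a test case’s expected outcome; the second treats an agreed list of rules as a definition of done.

```python
def compare_with_test_cases(expected, actual):
    """Find where a tool disagrees with ACT test case expectations.

    expected: {test_case_id: "passed" | "failed" | "inapplicable"}
    actual:   {test_case_id: outcome the tool reported}
    """
    false_positives = []  # tool reports a failure the test case says isn't there
    missed_failures = []  # test case expects a failure the tool didn't report
    for case_id, want in sorted(expected.items()):
        got = actual.get(case_id, "untested")
        if got == "failed" and want != "failed":
            false_positives.append(case_id)
        elif want == "failed" and got != "failed":
            missed_failures.append(case_id)
    return {"false_positives": false_positives, "missed_failures": missed_failures}


def definition_of_done(required_rules, results):
    """Acceptance check: each rule agreed upfront must pass or be inapplicable.

    Returns (done, blocking), where blocking lists rules that failed or never ran.
    """
    blocking = [rule for rule in required_rules
                if results.get(rule) not in ("passed", "inapplicable")]
    return (not blocking, blocking)
```

A non-empty false_positives list is exactly the situation described above: a test case says something should pass, and the tool says it doesn’t.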

ACT Rules In Your A11y Tools

As the work of the ACT Task Force develops, expect accessibility tools to start distinguishing which of their results are based on ACT rules published by the W3C, and which rules are unique to that particular tool. Knowing where automated accessibility tools overlap and where they don’t makes it clear why differences exist between tools. This should take away a lot of the confusion that currently exists around automated accessibility tools.
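Once each tool indicates which of its rules implement a published ACT rule, comparing coverage becomes a simple set operation. In this minimal sketch, every tool rule ID and ACT rule ID is a hypothetical placeholder, and a value of None marks a tool-specific rule.

```python
from typing import Dict, Optional


def compare_coverage(tool_a: Dict[str, Optional[str]], tool_b: Dict[str, Optional[str]]):
    """Each argument maps a tool's own rule IDs to the ACT rule it implements,
    or to None for a tool-specific rule."""
    act_a = {act for act in tool_a.values() if act is not None}
    act_b = {act for act in tool_b.values() if act is not None}
    return {
        # Both tools implement these ACT rules, so their results should agree.
        "shared_act_rules": sorted(act_a & act_b),
        "only_in_a": sorted(act_a - act_b),
        "only_in_b": sorted(act_b - act_a),
        # Tool-specific rules are the first place to look when results differ.
        "tool_specific_a": sorted(r for r, act in tool_a.items() if act is None),
        "tool_specific_b": sorted(r for r, act in tool_b.items() if act is None),
    }
```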

A large number of vendors of automated accessibility tools are involved in rule development, including AccessibilityOz, Deque Systems, IBM, Siteimprove, Level Access, The Paciello Group, and more. Developers of QA testing methodologies, including Trusted Tester, RGAA and Difi Key Indicators, also actively contribute to the work. Work on the ACT Rules is actively funded by the European Commission through the WAI-Tools project.

Harmonization Matters

Harmonization of accessibility testing is important. A lot of confusion and misconceptions exist around accessibility. Similar to how historically “yard” (the unit of length) had a slightly different meaning in different countries and even cities, “WCAG 2” is interpreted slightly differently in different places and by different organizations. With ACT Rules, we can define our yardstick, so that we all know how high the bar is.

ACT Rules aren’t going to make differences in interpretation disappear, nor should they. Accessibility is about a diversity of people. The wide range of perspectives that come from that is a major building block needed to build a more inclusive web. However, this doesn’t mean we shouldn’t strive to find consensus and communicate clearly about where that consensus exists, and where it does not.

Learn more about the ACT Rules.

All ACT Rules are based on the ACT Rules Format.

If you’d like to write your own ACT rules, or are interested in implementation, the ACT Rules community group is the group that has written most of the ACT rules that will soon be published by the W3C. Join them.